AI Training Servers

Powering the Future of AI with MiTAC Training Servers

AI training involves processing vast datasets to build and refine sophisticated models. This process demands immense computational power, high memory capacity, and fast data access. MiTAC's AI training servers are engineered to meet these challenges head-on, delivering exceptional performance and efficiency.

Unmatched Performance

With multi-GPU configurations built on the latest AMD and Intel® processors and support for advanced AMD or NVIDIA® GPUs, MiTAC AI servers shorten training times and bring your AI models to life faster.

Blazing-Fast, Large-Capacity Memory

High-bandwidth DDR5 memory, with up to 6 TB of RAM, gives your AI models the resources they need to process massive datasets efficiently.

High-Speed Storage

Leverage the power of NVMe SSDs to rapidly access and store your training data, minimizing training bottlenecks.

Scalable Architecture

The modular design allows you to seamlessly scale your server infrastructure as your training datasets and model complexity grow.

Advanced Cooling Solutions

Integrated liquid cooling options maintain optimal performance and prevent overheating.

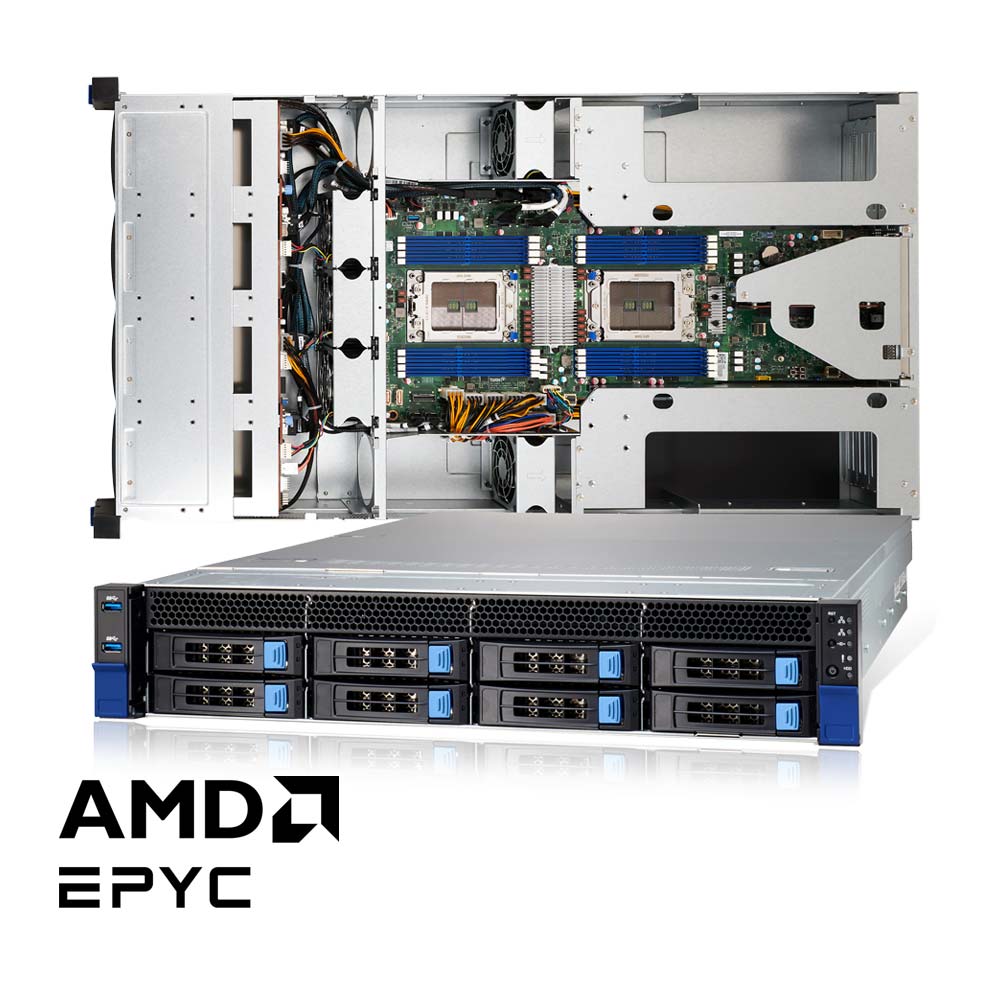

AMD Instinct™ Accelerators

Unleash AI Training Power with AMD Instinct™ MI300 Series Accelerators

The MiTAC G8825Z5 AI Training Server, powered by AMD Instinct™ MI300 series accelerators, is built for demanding AI training workloads. Supporting up to eight AMD Instinct™ MI325X accelerators, it provides the computational power needed to train large-scale neural networks and deep learning models quickly. Its large memory capacity and high memory bandwidth handle vast datasets in AI fields like natural language processing, computer vision, and recommendation systems. Optimized for multi-GPU training, with advanced cooling and an energy-efficient design, it is a strong platform for AI innovation.

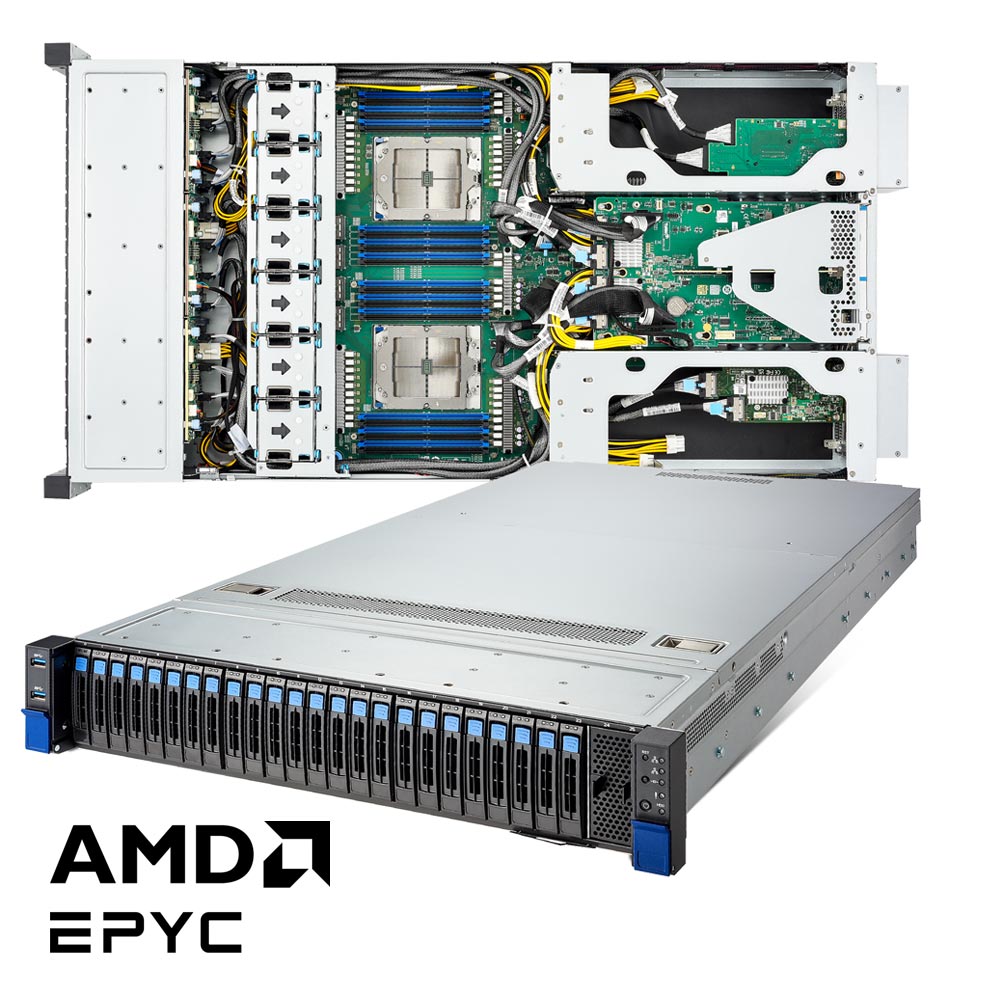

FT83A-B7129

4U dual-socket 3rd Gen Intel® Xeon® Scalable AI / HPC server platform supporting up to 10 GPU cards and 12 3.5" SAS/SATA

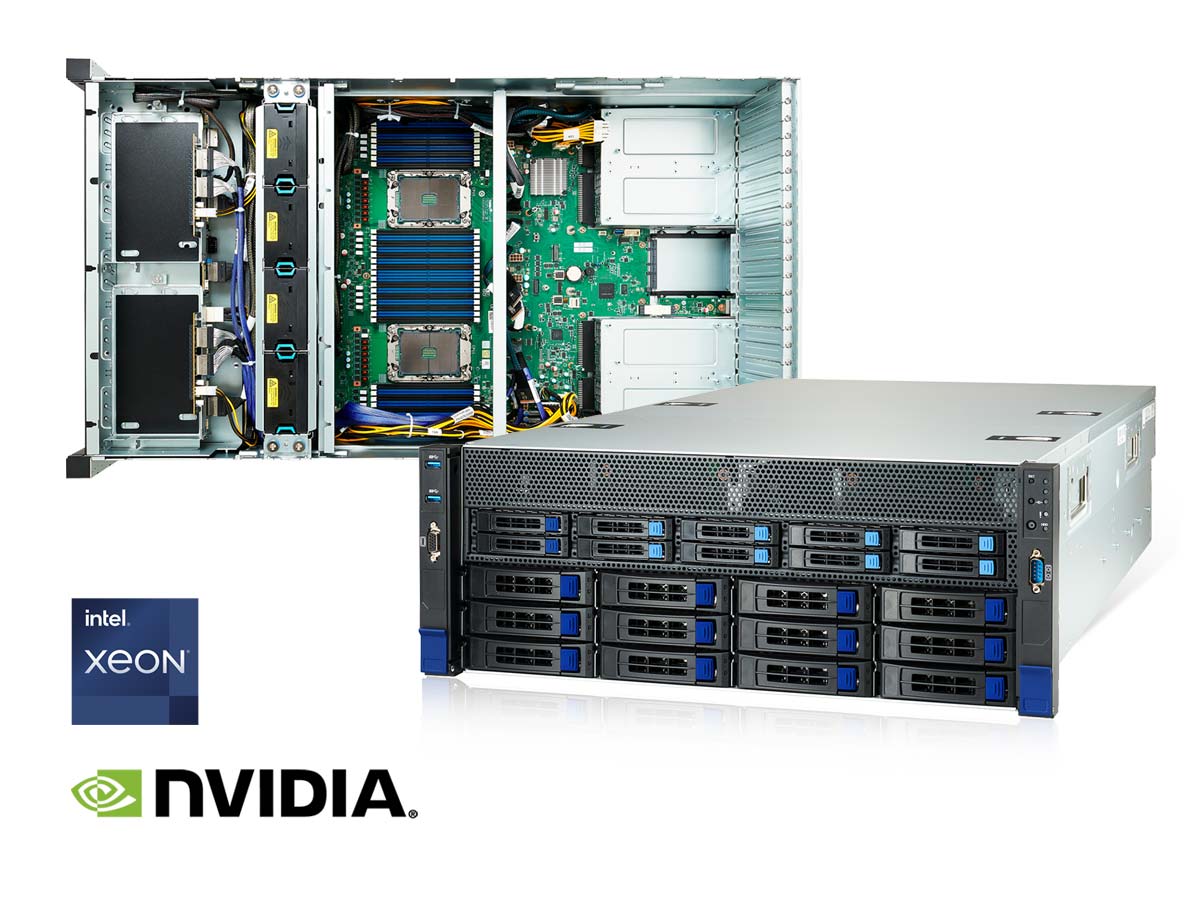

NVIDIA H100 GPU

Redefining AI Training and Data Analytics with NVIDIA H100 GPU

The NVIDIA H100 GPU's innovative technology dramatically accelerates large language models (LLMs), providing industry-leading performance for conversational AI. Servers equipped with the NVIDIA H100 GPU deliver unparalleled performance in AI training and data analytics. Notably, the Tyan FT83A-B7129 server has been certified to support 8 NVIDIA H100 GPU accelerators, making it an exceptional choice for maximizing AI capabilities.

AI Inference Servers

Accelerate Decision-Making with MiTAC Inference Servers

AI inference involves using trained models to make predictions or decisions in real time. This requires servers that can deliver quick, reliable responses with minimal latency. MiTAC's AI inference servers are optimized for high throughput and low latency, making them ideal for deploying AI applications in production environments.

Optimized Performance

MiTAC AI inference servers are designed for efficient inference workloads, delivering accurate and timely results without the need for excessive processing power.

Cost-Effective Deployment

Choose from a variety of configurations, including single-GPU or CPU-based options, to meet your specific inference needs and budget constraints.

Low-Latency Networking

High-speed network interfaces ensure seamless data input and output for real-time applications.

Scalable Infrastructure

As your inference demands grow, MiTAC AI inference servers can easily scale up to handle increased workloads.

Low Power Consumption

MiTAC AI inference servers are built with energy efficiency in mind, minimizing your environmental impact and operating costs.

AI Inference Workloads Powered by MiTAC 4-GPU Servers

Empowering AI Inference and Data-Intensive Applications with Intel® Data Center GPU Max and Flex Series

The Intel® Data Center GPU Max Series delivers unmatched performance for compute-intensive tasks, while the Flex Series offers versatility and efficiency for a broad range of workloads. With support for both GPU series, MiTAC M50FCP and D50DNP servers meet the evolving needs of modern data centers, ensuring optimal performance and flexibility.

Explore all MiTAC AI Servers or Contact Us to discuss your specific AI needs!
